- A new study led by researchers from IFT and UAM is the first to propose searching for dark matter signals in stellar streams originating from dwarf galaxies.

- The goal is to detect gamma rays produced by the annihilation of WIMPs, hypothetical particles that are extremely difficult to detect and are among the most promising candidates for dark matter.

- The Large Area Telescope (LAT) aboard NASA’s Fermi Gamma-ray Space Telescope is the key instrument for conducting these measurements.

One of the greatest mysteries and challenges in modern physics is uncovering the nature of dark matter. It is called “dark” because it neither emits nor absorbs light, and we can only infer its presence through its gravitational influence on visible objects around it. Although it has not yet been directly detected, extensive evidence points to its existence, and it is estimated to account for about 85% of all matter in the universe (with the remaining 15% being the ordinary matter that makes up everything we can see).

Now, a recent study led and carried out entirely by researchers from the Institute for Theoretical Physics (IFT UAM-CSIC) and the Department of Theoretical Physics at the Autonomous University of Madrid (UAM) proposes stellar streams as a novel and promising tool for detecting dark matter. These streams are tubular structures made of stars that move together, resembling “rivers of stars” flowing through galaxies like our own.

Searching for WIMPs in stellar streams

Specifically, this study aims to use stellar streams to detect signals from one of the leading dark matter candidates: Weakly Interacting Massive Particles (WIMPs). Scientists worldwide have been trying for nearly a century to uncover the nature of this enigmatic form of matter, and among all the proposed theories and candidates, WIMPs are among the best-motivated and most extensively studied in recent decades.

WIMPs are hypothetical, very massive particles that, in addition to experiencing gravity, may also interact via the weak force—one of the four known fundamental interactions between subatomic particles, responsible for certain types of radioactivity. These particles are extremely difficult to detect, making indirect detection one of the most promising approaches. This technique involves capturing the products of their interactions, as WIMPs may decay or annihilate each other when they meet, producing detectable particles such as gamma rays, the most energetic form of light.

Currently, scientists search for gamma-ray signals from dark matter in regions where we expect high concentrations of it, such as the center of our galaxy, galaxy clusters in the nearby universe, and small satellite galaxies of the Milky Way.

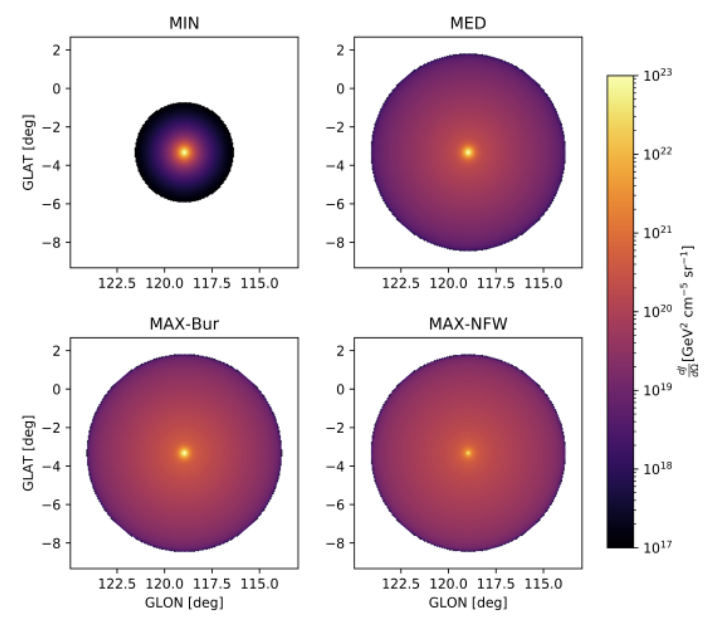

This innovative study, posted on arXiv as arXiv:2502.15656v1, proposes looking for gamma-ray signals from WIMP annihilations in the cores of stellar streams originating from dwarf galaxies. This marks the first time such an approach has been used for dark matter detection.

Stellar streams from dwarf galaxies

Stellar streams form when a galaxy, such as the Milky Way, gravitationally tears apart a globular cluster or a dwarf galaxy orbiting around its center. As a result of intense tidal forces, the object stretches into an elongated, tubular stellar structure, losing mass as it continues orbiting. Over hundreds of millions to billions of years, it may even completely dissolve.

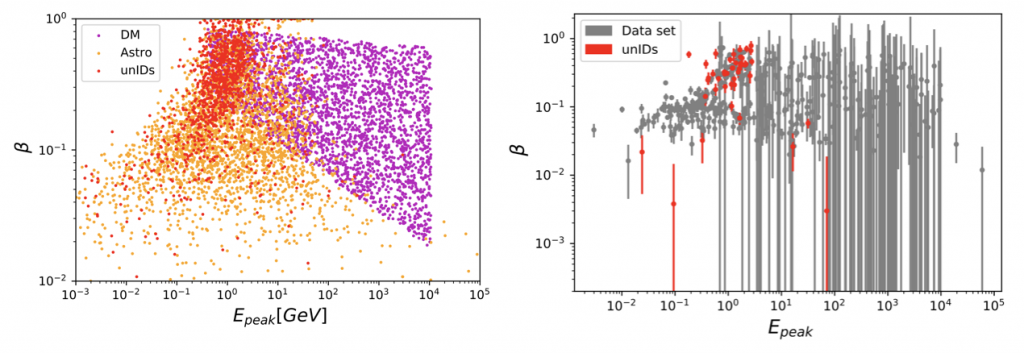

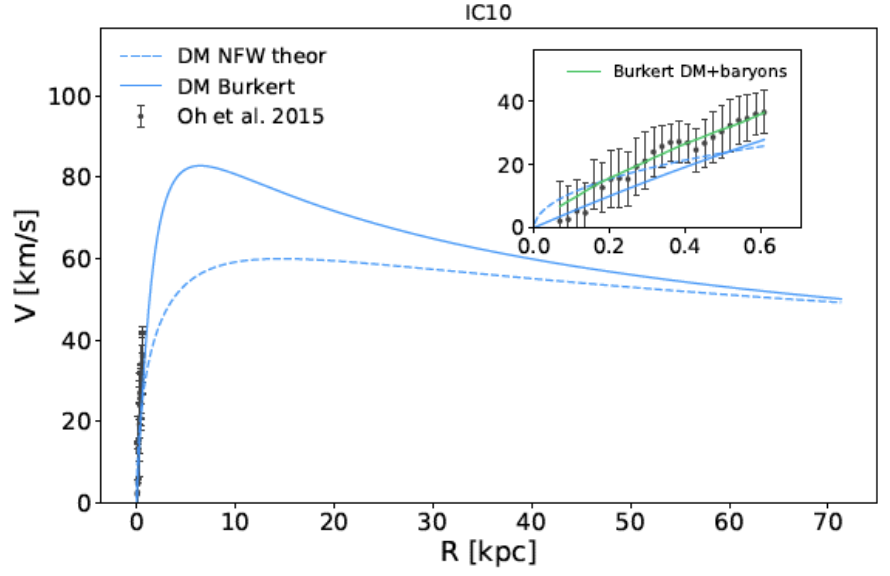

This study focuses on stellar streams originating from dwarf satellite galaxies of the Milky Way, which are the most dark matter-dominated astrophysical objects known. Specifically, a significant amount of dark matter is expected to have survived in the central regions of these objects despite the stretching and mass loss they undergo as they are captured by our galaxy and evolve under the resulting intense tidal forces.

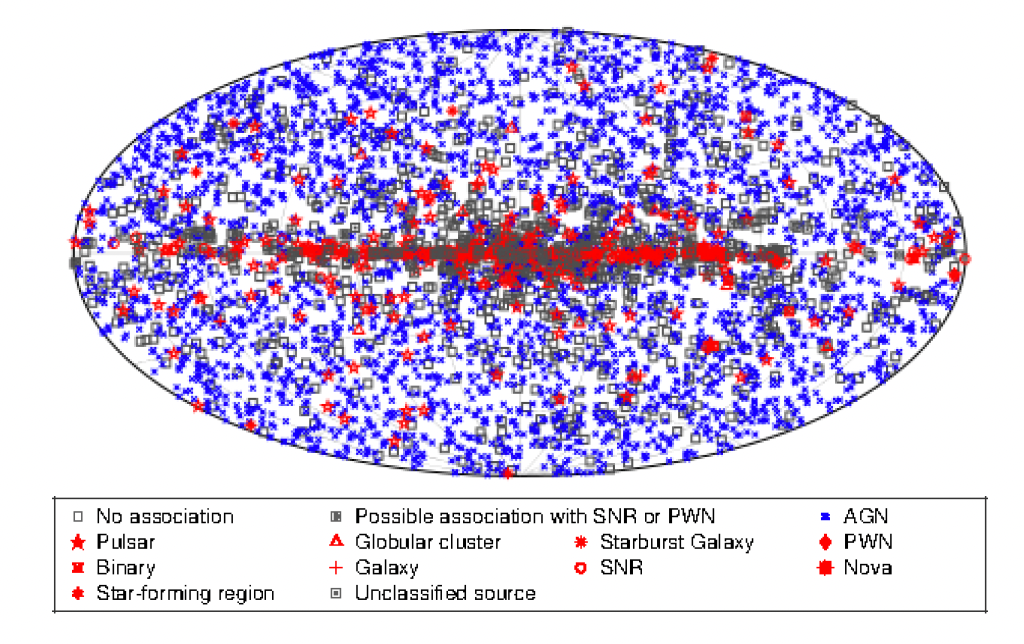

Sky map, in Galactic coordinates and Hammer projection, of the celestial tracks of stellar streams used in the study

The Fermi-LAT Telescope: a key tool

To search for dark matter signals in the form of gamma rays from WIMP annihilations in the core of these stellar streams, the research team used the Large Area Telescope (LAT) aboard NASA’s Fermi satellite.

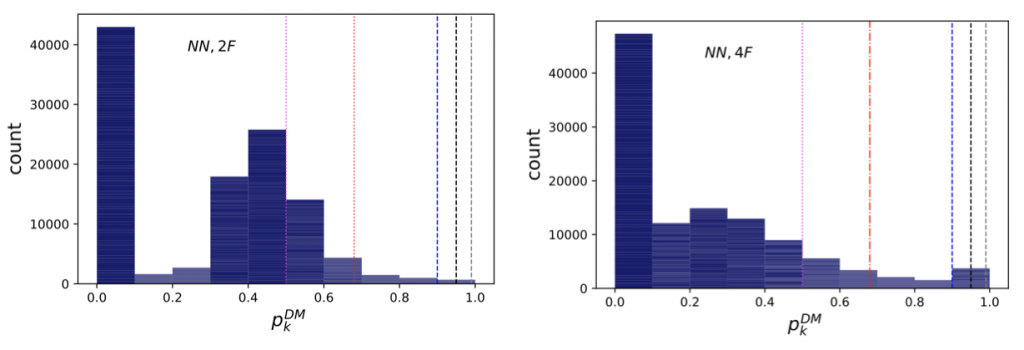

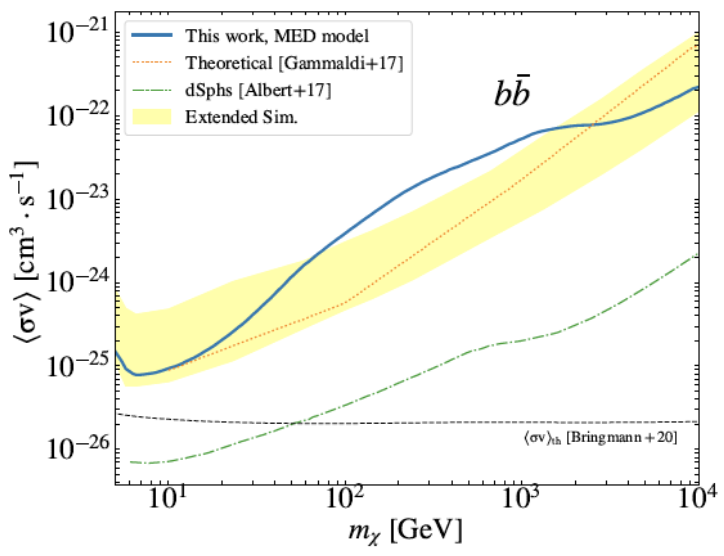

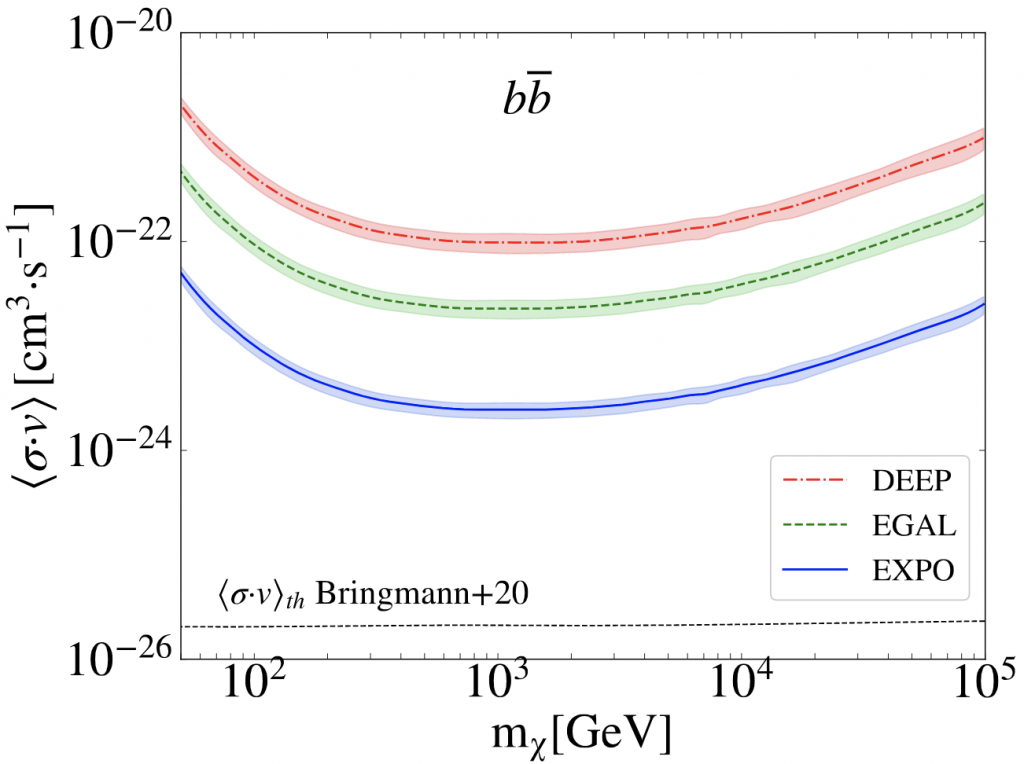

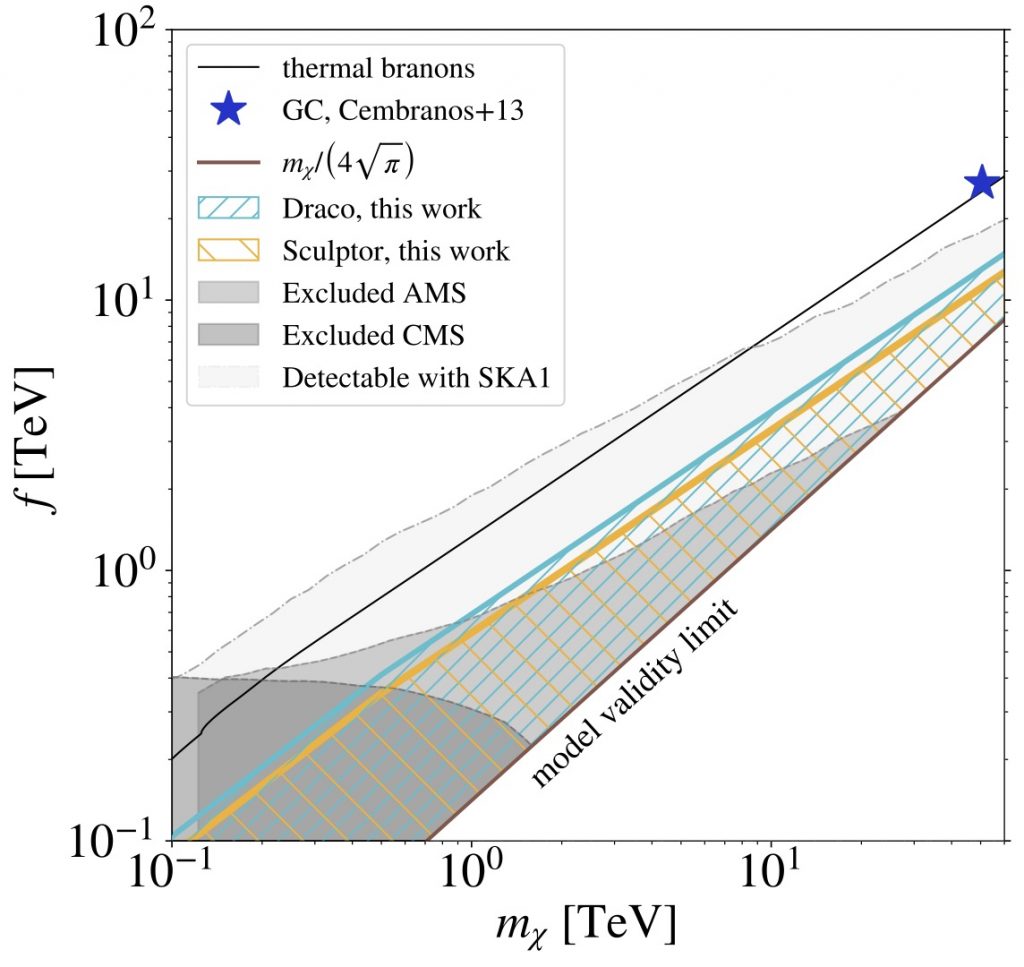

The authors analyzed data collected by the telescope in the direction of a sample of stellar streams, which were specifically selected for this research based on their known properties. Although the data analysis did not reveal a significant signal from these objects, it did allow the researchers to set competitive limits on the mass and interaction rate of dark matter.
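As a rough illustration of how a non-detection can translate into such limits, the expression below is the form commonly used in the indirect-detection literature for the gamma-ray flux expected from annihilating, self-conjugate WIMPs. It is a textbook sketch and not necessarily the exact expression used in the study; all symbols are introduced here for illustration only.

$$
\frac{d\Phi_\gamma}{dE} \;=\; \frac{\langle \sigma v \rangle}{8\pi\, m_\chi^{2}}\;\frac{dN_\gamma}{dE}\; J,
\qquad
J \;=\; \int_{\Delta\Omega}\int_{\mathrm{l.o.s.}} \rho_\chi^{2}(l,\Omega)\, dl\, d\Omega
$$

Here $m_\chi$ is the WIMP mass, $\langle \sigma v \rangle$ is the velocity-averaged annihilation cross section (the “interaction rate”), $dN_\gamma/dE$ is the gamma-ray spectrum produced per annihilation, and $\rho_\chi$ is the dark matter density along the line of sight within the observed solid angle $\Delta\Omega$. Because the predicted flux scales linearly with $\langle \sigma v \rangle$, an upper limit on the gamma-ray flux from a stream core, combined with an estimate of $J$ for that object, yields an upper limit on $\langle \sigma v \rangle$ for each assumed value of $m_\chi$.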

According to Cristina Fernández-Suárez, PhD student and lead author of the study: “Even finding no signal brings us closer to discovering what dark matter is, as it helps us rule out what it is not.”

The study’s co-author, Dr. Miguel A. Sánchez-Conde, who is also the current Scientific Coordinator of the entire Fermi-LAT collaboration, added: “During its more than 16 years of operation, the Fermi-LAT gamma-ray space telescope has revolutionized high-energy astrophysics, opening a new window for exploring the most violent aspects of the universe and improving our understanding of it. This study once again demonstrates its potential to lead the search for dark matter today.”

This study represents the first attempt to search for dark matter signals in the form of gamma rays from stellar streams, demonstrating their viability as competitive targets in the search for dark matter. It also highlights their complementarity with other astrophysical objects traditionally used in such searches.

Fernández-Suárez, C., & Sánchez-Conde, M. A. (2025). A search for dark matter annihilation in stellar streams with the Fermi-LAT. arXiv. https://doi.org/10.48550/arXiv.2502.15656